Since I improved the profiling algorithm in my bot blocker so it could trap cloaked bots more accurately, the statistics have been piling up, and I've now compiled and graphed that data for your viewing pleasure.

Hopefully this will show those who think I'm trying to stop beneficial spiders that those spiders aren't what I've been going on about at all.

For those new to the blog, let's quickly define a couple of terms.

- Cloaked Bot - a web crawler that uses the user agent string of a browser such as Internet Explorer, Firefox, or Opera.

- Blocked Agents - crawlers that plainly identify themselves in their user agent string, the way Googlebot does, but that are unwanted and typically blocked via robots.txt, .htaccess, or other methods.
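To make the two definitions above a little more concrete, here is a minimal Python sketch that buckets a request by its user agent string alone. It is not my blocker's profiling algorithm. `BLOCKED_TOKENS` is a hypothetical blocklist (the names are placeholders), and `classify`, `tally`, and `Category` are illustrative names. The key point it shows is that a browser-like string only makes a request a *candidate* cloaked bot; separating a cloaked bot from a real visitor takes behavioral profiling that the string can't provide.

```python
from collections import Counter
from enum import Enum
from typing import Iterable


class Category(Enum):
    BLOCKED_AGENT = "blocked agent"  # identifies itself, but is unwanted
    BROWSER_LIKE = "browser-like"    # a real visitor OR a cloaked bot
    OTHER = "other"                  # everything else, e.g. allowed spiders


# Hypothetical blocklist: substrings unwanted crawlers put in their own
# user agent strings. A real list would be built from your own logs.
BLOCKED_TOKENS = ("examplescraper", "badbot")

# Browser names mentioned in the post; a cloaked bot copies one of these.
BROWSER_TOKENS = ("msie", "firefox", "opera")


def classify(user_agent: str) -> Category:
    """Bucket a single request by its user agent string only."""
    ua = user_agent.lower()
    if any(token in ua for token in BLOCKED_TOKENS):
        return Category.BLOCKED_AGENT
    if any(token in ua for token in BROWSER_TOKENS):
        # The string alone can't tell a person from a cloaked bot;
        # that requires profiling behavior, which isn't shown here.
        return Category.BROWSER_LIKE
    return Category.OTHER


def tally(user_agents: Iterable[str]) -> Counter:
    """Count page requests per category, e.g. from one day of logs."""
    return Counter(classify(ua) for ua in user_agents)
```

Note that a self-identifying spider like Googlebot lands in `OTHER` here, which is the point: the blocklist targets unwanted agents, not beneficial spiders.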

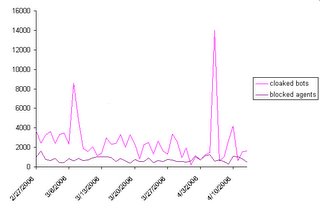

The chart above compares the number of pages requested by cloaked bots pretending to be browsers (in purple) with the number requested by blocked agents that have a plainly identifiable user agent string. One thing is immediately obvious: page requests by the cloaked bots far exceed those made by all of the identifiable blocked agents combined.

The trend analysis of the cloaked bots reveals that, apart from a couple of recent spikes, their page requests have been in slow but steady decline over the charted period. This could be a direct result of blocking them: since they're served nothing but error messages, they aren't getting what they came for. Over the same period, the ordinary blocked agents keep requesting pages at a steady pace even though they get nothing but error pages, and so far their numbers show no obvious trend.
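The post doesn't say how its trend line was computed; one common choice is an ordinary least-squares fit of daily request counts against the day number, where a negative slope means a decline. Here is a minimal Python sketch of that, assuming one count per day for a single category (the function name `trend_slope` is hypothetical):

```python
from typing import Sequence


def trend_slope(daily_counts: Sequence[float]) -> float:
    """Least-squares slope of counts against day index 0, 1, 2, ...

    Negative means requests are declining on average; near zero means
    no clear trend.
    """
    n = len(daily_counts)
    if n < 2:
        raise ValueError("need at least two days of data")
    mean_x = (n - 1) / 2                  # mean of 0..n-1
    mean_y = sum(daily_counts) / n
    # Covariance of (day, count) divided by variance of day gives the slope.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(daily_counts))
    var = sum((x - mean_x) ** 2 for x in range(n))
    return cov / var
```

One caveat: least squares is pulled around by outliers like the couple of spikes mentioned above, so a median-based estimator such as Theil-Sen may give a steadier reading of a "slow but steady" decline.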

While some of this may look underwhelming at first, realize that many of these crawls would have been for hundreds or thousands of pages and are now being stopped before they ever start. Much of the attempted crawling appears to come from repeat offenders that already have a complete list of the site's pages from prior visits. Some cloaked bots do manage to get the site map before being stopped and then try to crawl every link they know about, albeit fruitlessly.

At a minimum, this information proved my theory, for my website anyway, that the real problem isn't the bots you know about and can see; it's the bots you can't see.

With all this in mind, do you think you really know how many actual visitors and page views your site gets?